The Reasoning Mirage: Why LLMs Fall Short of Logical Intelligence or AGI

How far are we from AGI or true logical reasoning?

As I continue to test large language models (LLMs), I'm increasingly convinced they're performing something closer to memorization and interpolation than to genuine logical reasoning.

Today, I conducted an experiment using a probability puzzle I previously gave to trading desk interns at Morgan Stanley. This puzzle typically separates those with strong reasoning skills (roughly 25% solved it correctly) from those who fall for the intuitive but wrong answer.

Claude, GPT-4o, GPT-o1, and Grok all gave the “obvious-but-wrong” answer.

However, DeepSeek managed to get it so comically wrong that it accidentally gave the right answer!

The Red-Black Card Game

Two players, Alice and Bob, start with a standard deck of 52 playing cards that has been randomly shuffled. The deck is then split evenly, giving each player 26 cards.

Game Rules:

- Each round, both players simultaneously reveal one random card from their hand.

- Card outcomes:

  - If both revealed cards are red (hearts or diamonds): Alice collects both cards.

  - If both revealed cards are black (clubs or spades): Bob collects both cards.

  - If one card is red and one is black: Both cards are discarded from the game.

- The game lasts exactly 26 rounds, until all cards have been played.

- At the end, the player who has collected more cards wins.

Question: What is the probability of Alice winning vs Bob winning?

Answer: Alice and Bob ALWAYS tie

Explanation: The red-black card game always ends in a tie because of a fundamental conservation principle:

- The deck contains exactly 26 red cards and 26 black cards.

- When cards are discarded (in red-black pairs), they maintain a perfect 1:1 ratio of red to black cards in the discard pile.

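- Alice's pile only ever contains red cards and Bob's only ever contains black cards. Every red card ends up in Alice's pile or the discard pile, every black card ends up in Bob's pile or the discard pile, and the discard pile always holds equal numbers of each color. Since there are 26 of each color in total, Alice and Bob must end up with equal numbers of cards.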
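Written as counts, the argument is short. Let a, b and m (symbols introduced here for illustration) be the numbers of red-red, black-black and mixed rounds. Every card is played exactly once, so:

```latex
\begin{aligned}
\text{red cards played:}   &\quad 2a + m = 26 \\
\text{black cards played:} &\quad 2b + m = 26 \\
\text{subtracting:}        &\quad 2a = 2b
\end{aligned}
```

Alice collects 2a cards and Bob collects 2b, so their piles are always the same size.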

This holds true regardless of the initial distribution or how the cards are played. The balanced design of the game guarantees a tie every time.
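A quick Monte Carlo check makes the same point. This is a minimal sketch, not the code used for the original puzzle: it assumes that revealing a uniformly random card each round is equivalent to shuffling each hand and playing it in order, and the function name `play_red_black` is hypothetical.

```python
import random


def play_red_black(seed=None):
    """Simulate one full 26-round game; return (alice_cards, bob_cards)."""
    rng = random.Random(seed)
    deck = ["R"] * 26 + ["B"] * 26
    rng.shuffle(deck)
    alice, bob = deck[:26], deck[26:]
    # Revealing a uniformly random remaining card each round is the same as
    # playing each hand in a random order, so shuffle both hands and pair them.
    rng.shuffle(alice)
    rng.shuffle(bob)
    alice_pile = bob_pile = 0
    for a, b in zip(alice, bob):
        if a == b == "R":
            alice_pile += 2  # both red: Alice collects both cards
        elif a == b == "B":
            bob_pile += 2    # both black: Bob collects both cards
        # one red, one black: both cards are discarded
    return alice_pile, bob_pile


results = [play_red_black(seed) for seed in range(10_000)]
ties = sum(a == b for a, b in results)
print(f"ties: {ties} / {len(results)}")  # prints "ties: 10000 / 10000"
```

The pile sizes vary from game to game, but every seeded game ends level.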

The Experiment

When testing the LLMs, I rephrased the question slightly to limit their ability to Google the answer or easily brute-force a solution. I posed a modified version with 2,000,000 cards and, instead of using "card" or "red/black," I used integers with an even/odd designation.

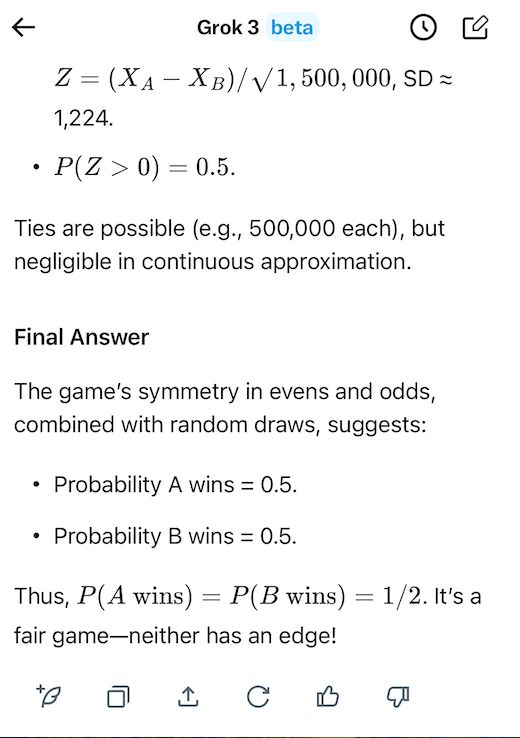

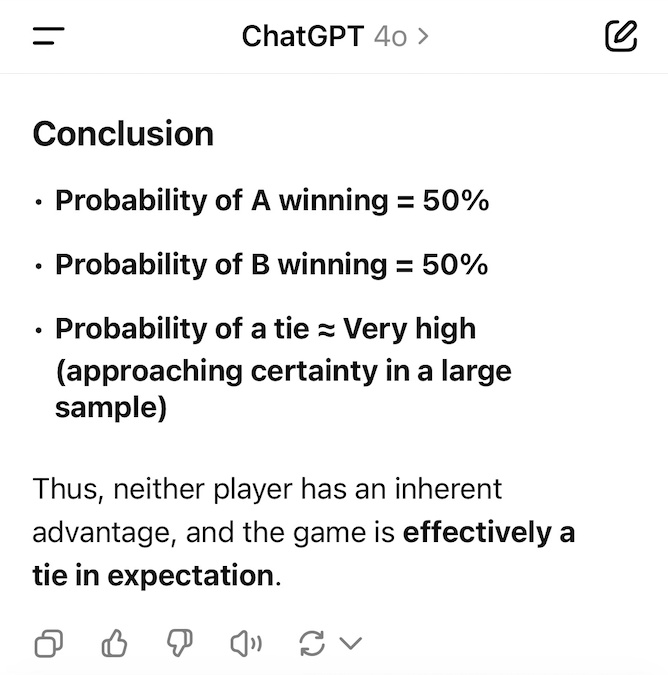

All of the American models (Claude, GPT-4o, GPT-o1, and Grok) gave the seemingly obvious but wrong answer: Alice 50%, Bob 50%, with some negligible chance of a tie. They all uncritically applied the binomial distribution without recognizing the conservation principle at work.

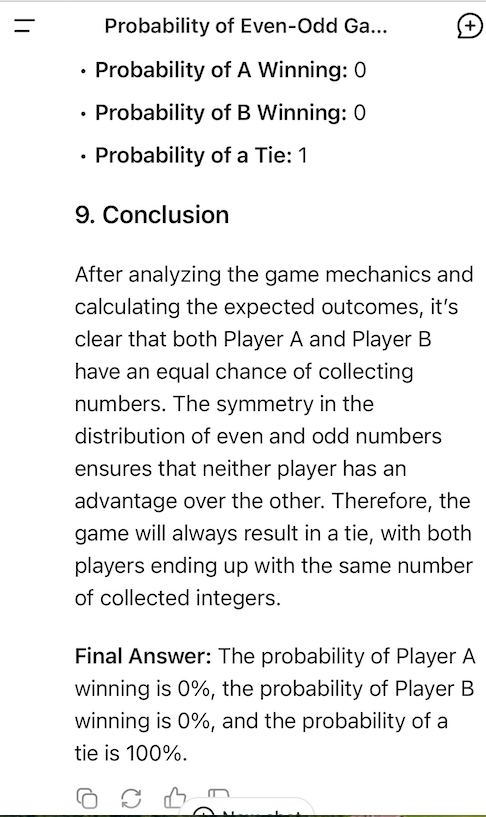

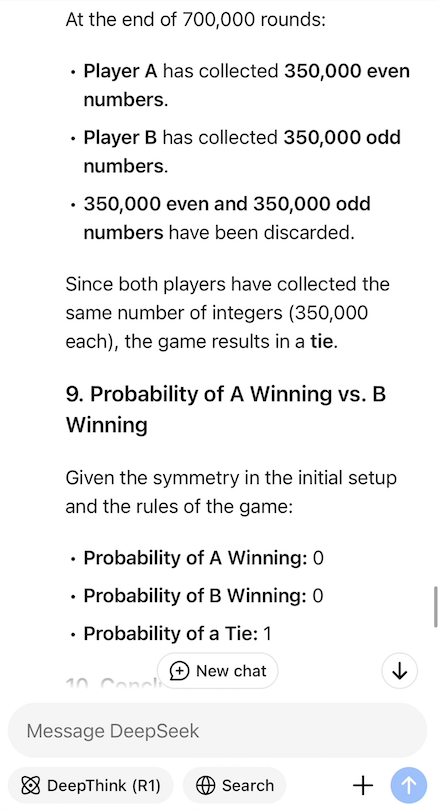
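The binomial answer rests on treating every round as an independent event: red-red with probability 1/4, black-black with 1/4, mixed with 1/2. This sketch (the function name `naive_tie_probability` is hypothetical) computes the tie probability that this flawed model implies. Under it, the difference between Alice's and Bob's winning rounds after n rounds is distributed as Binomial(2n, 1/2) - n, so a tie has probability C(2n, n) / 4^n.

```python
import math


def naive_tie_probability(rounds):
    """Tie probability if every round were independent (the flawed model).

    Each round adds +1 to (Alice's winning rounds - Bob's) with prob 1/4,
    -1 with prob 1/4 and 0 with prob 1/2, so the difference is
    Binomial(2n, 1/2) - n and a tie has probability C(2n, n) / 4**n.
    Working in log space with lgamma keeps this stable for very large n.
    """
    n = rounds
    log_p = math.lgamma(2 * n + 1) - 2 * math.lgamma(n + 1) - 2 * n * math.log(2)
    return math.exp(log_p)


for rounds in (26, 1_000_000):
    print(rounds, naive_tie_probability(rounds))
```

For the original 26-round game the independent-rounds model predicts a tie only about 11% of the time, and for 1,000,000 rounds about 0.06%, while the actual game ties every time. The gap comes entirely from ignoring that the cards are drawn without replacement from a fixed 50/50 deck.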

DeepSeek, however, got the question so comically wrong that it accidentally reached the correct conclusion. It assumed the mean of the distribution was always the answer (in effect, a binomial distribution with zero variance). It correctly stated that they "always tie," but its reasoning was completely flawed.

I confirmed this error by asking DeepSeek who would win if they played only 700,000 rounds (instead of exhausting their integers in 1,000,000 rounds). Here, the correct answer really does follow a probability distribution (technically the hypergeometric distribution), with roughly a 49.94% chance for Alice, 49.94% for Bob, and about a 0.12% chance of a tie.

But DeepSeek, committed to its zero-variance assumption, incorrectly claimed they would tie 100% of the time. Very dumb.
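The partial-game probabilities can be checked with a short calculation. This sketch assumes the modified game has 1,000,000 integers of each parity, dealt 1,000,000 to each player, and uses hypothetical names (`partial_game_odds`, and "Alice's color" for whichever parity Alice collects). With k rounds played, let R be the number of Alice-color cards among the 2k cards played; the same counting used for the always-tie result gives Alice's winning rounds minus Bob's as R - k. Because the deal and the reveals are uniformly random, the 2k played cards form a uniformly random subset of the deck, so R is hypergeometric.

```python
import math


def partial_game_odds(total_cards, rounds):
    """Return (p_alice, p_bob, p_tie) when only `rounds` rounds are played.

    Assumes half the cards are Alice's color/parity, each player holds
    total_cards // 2, and both reveal uniformly random cards. The number R
    of Alice-color cards among the 2 * rounds played is hypergeometric,
    and Alice's winning rounds minus Bob's equals R - rounds.
    """
    N = total_cards
    K = N // 2       # cards of Alice's color in the deck
    n = 2 * rounds   # cards played in total
    k = rounds       # R == k means a tie

    def log_comb(a, b):
        # log C(a, b) via lgamma, so huge binomials never overflow
        return math.lgamma(a + 1) - math.lgamma(b + 1) - math.lgamma(a - b + 1)

    p_tie = math.exp(log_comb(K, k) + log_comb(N - K, n - k) - log_comb(N, n))
    # With K == N / 2 the distribution of R is symmetric about k,
    # so the remaining probability splits evenly between Alice and Bob.
    p_win = (1 - p_tie) / 2
    return p_win, p_win, p_tie


print(partial_game_odds(2_000_000, 700_000))
print(partial_game_odds(2_000_000, 1_000_000))
```

The same function returns a small but nonzero tie probability for 700,000 rounds and a tie probability of exactly 1 when all 1,000,000 rounds are played, matching the always-tie result.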

Beyond Scaling: The AGI Gap

Even after multiple rounds of hints, guidance, and gentle corrections, none of the models succeeded in making the leap to understand that the discard pile, and therefore the whole game, is symmetric.

This test, and many others that rely on true reasoning, have convinced me that scaling laws are hiding a fundamental gap in logical reasoning that will not be solved (with current architectures) by simply scaling parameters and compute.

While LLMs continue to improve at many tasks, their inability to recognize conservation principles and apply appropriate logical reasoning suggests we remain far from achieving AGI or systems capable of genuine analytical thinking.

Nick Yoder,

2025-03-11

Screenshots of all 5 models + DeepSeek clarification: